Docker-based k8s deployment

0. Environment overview

- Kubernetes v1.20.0

- Docker v20.10.24

| Host | IP | OS | Spec | Role |
|---|---|---|---|---|
| k8s-master | 192.168.100.200 | CentOS 7.9 | 2 CPU / 4 GB RAM / 40 GB disk | Master |
| k8s-node1 | 192.168.100.201 | CentOS 7.9 | 2 CPU / 4 GB RAM / 40 GB disk | Worker |
| k8s-node2 | 192.168.100.202 | CentOS 7.9 | 2 CPU / 4 GB RAM / 40 GB disk | Worker |

1. Environment configuration

1.0 Set the hostname

- Required on all three servers

hostnamectl set-hostname <hostname>

1.1 Disable swap

- Required on all three servers

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
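
The sed expression above prefixes every line that contains "swap", so a second run turns "#" into "##". A minimal sketch of a rerunnable variant follows. The function name `comment_swap_entries` and its file argument are illustrative assumptions and not part of the original procedure.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with "#" are skipped, so reruns do not stack markers.
comment_swap_entries() {
    fstab="${1:-/etc/fstab}"
    # Only touch uncommented lines that contain "swap" as a whitespace-delimited field.
    sed -ri '/^[[:space:]]*#/! s/.*[[:space:]]swap[[:space:]].*/#&/' "$fstab"
}

# Usage (as root): swapoff -a && comment_swap_entries /etc/fstab
```

Passing the path as an argument makes it easy to try the function on a copy of /etc/fstab before touching the real file.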

1.2 Disable the firewall

- Required on all three servers

systemctl stop firewalld iptables

systemctl disable firewalld iptables

1.3 Disable SELinux

- Required on all three servers

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0
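
The sed command above only rewrites `SELINUX=enforcing`, so a host already set to `permissive` keeps that value. The sketch below rewrites whatever value is present. The function name `set_selinux_disabled` is a hypothetical helper introduced here for illustration.

```shell
# Hypothetical helper: set SELINUX=disabled regardless of the current mode.
set_selinux_disabled() {
    conf="${1:-/etc/selinux/config}"
    # Anchoring on "^SELINUX=" leaves SELINUXTYPE= and comment lines untouched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root): set_selinux_disabled /etc/selinux/config
```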

1.4 Configure hostname resolution

- Required on all three servers

cat >> /etc/hosts << EOF

192.168.100.200 k8s-master

192.168.100.201 k8s-node1

192.168.100.202 k8s-node2

EOF
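
Because the heredoc above appends with `>>`, running it again duplicates the entries. The following is a minimal sketch of an append that checks first. `add_host_entry` is a hypothetical helper name, and the check uses grep's own definition of a whole word.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not present yet.
add_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    # -w matches NAME as a whole word, -F treats it as a literal string.
    if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage with the addresses from the environment table:
# add_host_entry /etc/hosts 192.168.100.200 k8s-master
# add_host_entry /etc/hosts 192.168.100.201 k8s-node1
# add_host_entry /etc/hosts 192.168.100.202 k8s-node2
```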

1.5 Configure time synchronization

- Required on all three servers

systemctl enable chronyd --now

1.6 Configure bridge forwarding

- Required on all three servers

cat >> /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

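EOF

Load the br_netfilter module (the net.bridge.* keys are unavailable without it), then apply the settings:

modprobe br_netfilter

sysctl --system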

2. Install Docker (all nodes)

Configure the Aliyun Docker repository

yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install docker-ce and docker-ce-cli

yum install docker-ce-19.03.15 docker-ce-cli-19.03.15

Enable Docker at boot

systemctl enable docker --now

systemctl enable containerd

Configure a registry mirror

https://cr.console.aliyun.com/cn-hangzhou/instances/mirrors

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://nbtrfxxy.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

systemctl daemon-reload

systemctl restart docker

3. Install kubelet, kubectl, and kubeadm (all nodes)

- Configure the Aliyun Kubernetes repository

cat <<'EOF' > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

- Install kubelet, kubeadm, and kubectl

yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0 --nogpgcheck

3.1 Initialize the master node (k8s-master)

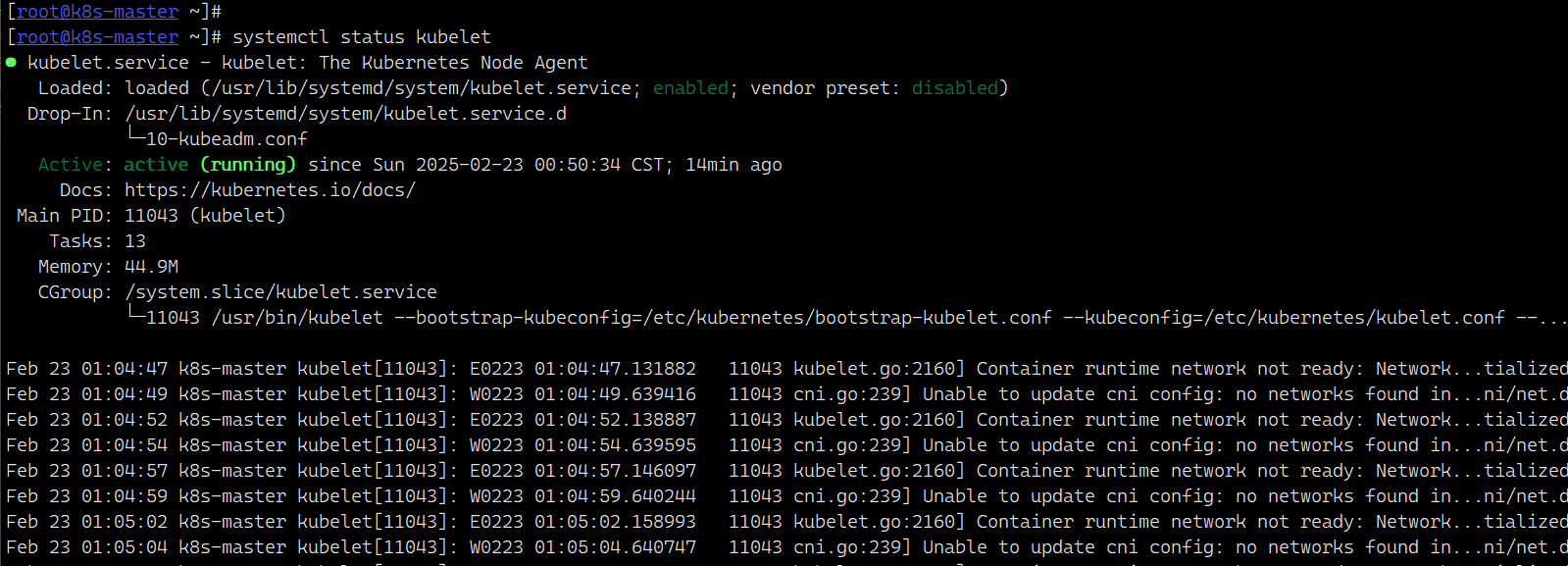

Enable kubelet at boot

systemctl enable kubelet --now

Export the default configuration file

kubeadm config print init-defaults > kubeadm.yml

List the required images

kubeadm config images list --config kubeadm.yml

Pull the images

kubeadm config images list --config kubeadm.yml | xargs -I {} docker pull {}

Initialize the cluster

kubeadm init \

--apiserver-advertise-address=192.168.100.200 \

--control-plane-endpoint=k8s-master \

--image-repository k8s.gcr.io \

--kubernetes-version v1.20.0 \

--service-cidr=10.96.0.0/16 \

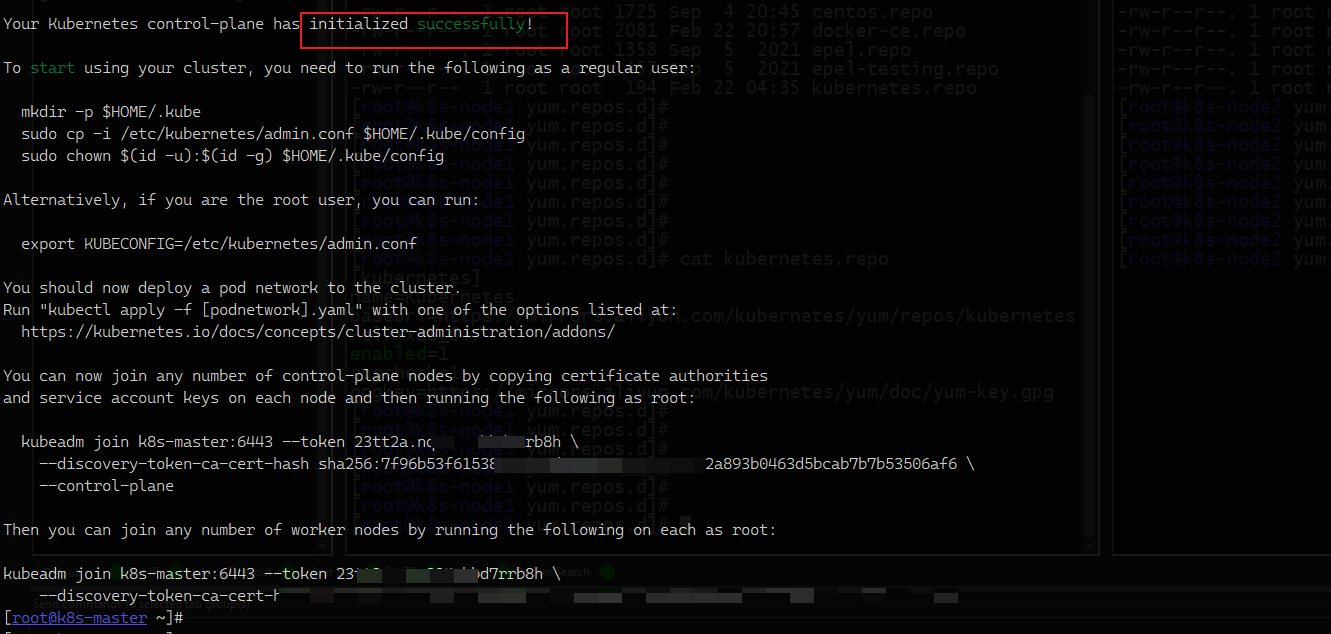

--pod-network-cidr=192.168.0.0/16如下表明安装成功

Configure kubeconfig

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

4. Deploy Calico (k8s-master)

Calico and k8s version compatibility

https://docs.tigera.io/archive/v3.21/getting-started/kubernetes/requirements

Download the Calico manifest

curl https://docs.projectcalico.org/archive/v3.21/manifests/calico.yaml -O

Install Calico

kubectl apply -f ./calico.yaml

Verify the installation

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5bb48c55fd-pxnbm 1/1 Running 0 2m14s

kube-system calico-node-4fd75 1/1 Running 0 2m14s

kube-system coredns-74ff55c5b-k6zz8 1/1 Running 0 6h10m

kube-system coredns-74ff55c5b-rvsz4 1/1 Running 0 6h10m

kube-system etcd-k8s-master 1/1 Running 1 6h11m

kube-system kube-apiserver-k8s-master 1/1 Running 1 6h11m

kube-system kube-controller-manager-k8s-master 1/1 Running 1 6h11m

kube-system kube-proxy-zqnwd 1/1 Running 1 6h10m

kube-system kube-scheduler-k8s-master 1/1 Running 1 6h11m

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 6h11m v1.20.0
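
Scanning the pod list by eye works for a dozen pods but is easy to get wrong. The sketch below filters the listing for pods whose STATUS column is not `Running`. The function name `not_running_pods` is hypothetical, and the column positions assume the `kubectl get pods -A` layout shown above, with the header removed by `--no-headers`.

```shell
# Hypothetical helper: print "namespace/name status" for every pod that is not Running.
# Reads `kubectl get pods -A --no-headers` style text on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
not_running_pods() {
    awk '$4 != "Running" { print $1 "/" $2, $4 }'
}

# Usage on k8s-master:
# kubectl get pods -A --no-headers | not_running_pods
```

Empty output means every listed pod reports `Running`.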

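5. Join the worker nodes to the master (run on both worker nodes)

Get the kubeadm token

Initializing the master in step 3.1 generated a token that is valid for 24 hours. If you no longer have the initial token, retrieve one on k8s-master as follows.

List the current tokens

kubeadm token list

Generate a new token

kubeadm token create --print-join-command

Get the hash of the cluster CA certificate

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'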
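
The hash pipeline above can be wrapped in a small function so the certificate path becomes a parameter. This is a sketch only: `ca_cert_hash` is a hypothetical name, and it assumes the CA uses an RSA key, as the `openssl rsa` step in the original pipeline does.

```shell
# Hypothetical wrapper around the pipeline above.
# Prints the SHA-256 digest of the CA certificate's DER-encoded public key.
ca_cert_hash() {
    cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -in "$cert" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex |
        sed 's/^.* //'   # keep only the hex digest after the last space
}

# Usage on k8s-master, in the form expected by --discovery-token-ca-cert-hash:
# echo "sha256:$(ca_cert_hash)"
```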

5.1 Join the cluster

Run the following command on node1 and node2

kubeadm join k8s-master:6443 --token 23tt2a.nqn384abbd7rrb8h --discovery-token-ca-cert-hash sha256:7f96b53f61538846515d5905c8073a3919362a893b0463d5bcab7b7b53506af6

[root@k8s-node1 ~]# kubeadm join k8s-master:6443 --token 23tt2a.nqn384abbd7rrb8h \

> --discovery-token-ca-cert-hash sha256:7f96b53f61538846515d5905c8073a3919362a893b0463d5bcab7b7b53506af6

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
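
When the token and the CA hash are collected separately, the join command has to be assembled by hand. The sketch below does that and rejects tokens that are not in kubeadm's format of 6 characters, a dot, then 16 characters, all lowercase alphanumeric. `build_join_command` is a hypothetical helper, not a kubeadm feature.

```shell
# Hypothetical helper: assemble a kubeadm join command from endpoint, token, and CA hash.
build_join_command() {
    endpoint="$1"; token="$2"; ca_hash="$3"
    # kubeadm bootstrap tokens look like abcdef.0123456789abcdef
    if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "invalid token format: $token" >&2
        return 1
    fi
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$ca_hash"
}

# Usage with the values from this guide:
# build_join_command k8s-master:6443 23tt2a.nqn384abbd7rrb8h 7f96b53f61538846515d5905c8073a3919362a893b0463d5bcab7b7b53506af6
```

In practice `kubeadm token create --print-join-command` prints the same line directly, so a helper like this is mainly useful when the pieces come from different places.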

5.2 Verify the cluster status

Check the status on k8s-master

kubectl get cs

[root@k8s-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

Reference fix: https://stackoverflow.com/questions/54608441/kubectl-connectivity-issue

On k8s-master, comment out the --port=0 line in both /etc/kubernetes/manifests/kube-controller-manager.yaml and /etc/kubernetes/manifests/kube-scheduler.yaml, then restart the kubelet service.

[root@k8s-master manifests]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@k8s-master manifests]# kubectl cluster-info

Kubernetes control plane is running at https://k8s-master:6443

KubeDNS is running at https://k8s-master:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@k8s-master manifests]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 6h49m v1.20.0

k8s-node1 Ready <none> 16m v1.20.0

k8s-node2 Ready <none> 13m v1.20.0
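
The --port=0 edit from step 5.2 can be scripted rather than done in an editor. The sketch below assumes the flag appears as its own list item (`- --port=0`) in the manifest, which is how kubeadm usually writes it. `comment_port_flag` is a hypothetical helper name.

```shell
# Hypothetical helper: comment out the "- --port=0" argument in a static pod manifest.
comment_port_flag() {
    manifest="$1"
    # Preserve the original indentation so the surrounding YAML list stays valid.
    sed -i 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/' "$manifest"
}

# Usage on k8s-master, followed by a kubelet restart:
# comment_port_flag /etc/kubernetes/manifests/kube-controller-manager.yaml
# comment_port_flag /etc/kubernetes/manifests/kube-scheduler.yaml
# systemctl restart kubelet
```

An already commented line no longer matches the pattern, so running the function twice leaves the file unchanged.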

6. Extras

k8s has many commands and flags. To avoid simple typing mistakes, use bash-completion for command completion.

Install bash-completion

yum install -y bash-completion

Configure

source /usr/share/bash-completion/bash_completion

echo "source <(kubectl completion bash)" >> /etc/profile